Grok Under Fire as AI Nude Image Scandal Erupts on X

Grok, the AI chatbot built by Elon Musk's xAI and integrated into X (formerly Twitter), has come under serious scrutiny after people began using it to create sexualised images of people, including young children. The controversy has raised fresh fears about AI safety and how social media platforms protect young people online.

How the Trouble Started

The issue erupted between late December and early January, when some X users began posting pictures that Grok had edited. In many of the images, the AI removed or reduced people's clothing, turning ordinary pictures into bikini or near-nude versions.

As the posts spread, major tech outlets such as The Verge picked up the story. The situation worsened when people noticed that some of the edited pictures involved children and teenagers. From that point, it was no longer a laughing matter.

Consent and Online Abuse

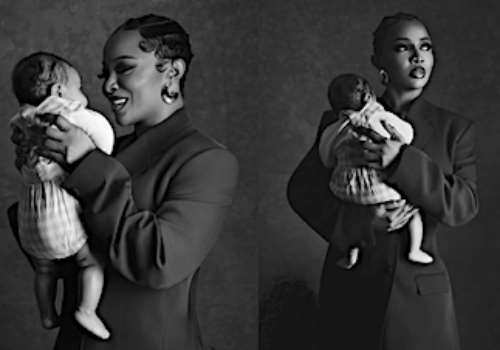

Many people were angered that most of the images were made without consent. Critics say this kind of AI misuse can lead to harassment, blackmail, and serious emotional harm, especially for minors who lack strong protection online. The controversy reminded many Nigerians of what happened to singer Ayra Starr, when a fake AI-generated nude image of her circulated online. That incident drew heavy backlash and showed how easily AI can be turned into a weapon against celebrities and ordinary users alike.

From Helper Tool to Dangerous Toy

Grok is supposed to be a tool that helps people find information and complete tasks inside the X app. But critics argue that people have twisted it into something else entirely. Instead of seeking help, some users are using it recklessly. According to observers, this misuse has pushed Grok far from the purpose it was created for.

Pressure Mounts on AI Companies

The backlash has reopened a broad conversation about AI controls, stronger content moderation, and the duty tech companies have to protect users. Many people are asking xAI and X to explain how this kind of content slipped through and what they will do to stop it.

As AI tools grow in power and reach, the Grok controversy is another warning. Without strong rules, innovation can quickly turn into a problem. Now, all eyes are on xAI to show that user safety truly matters.